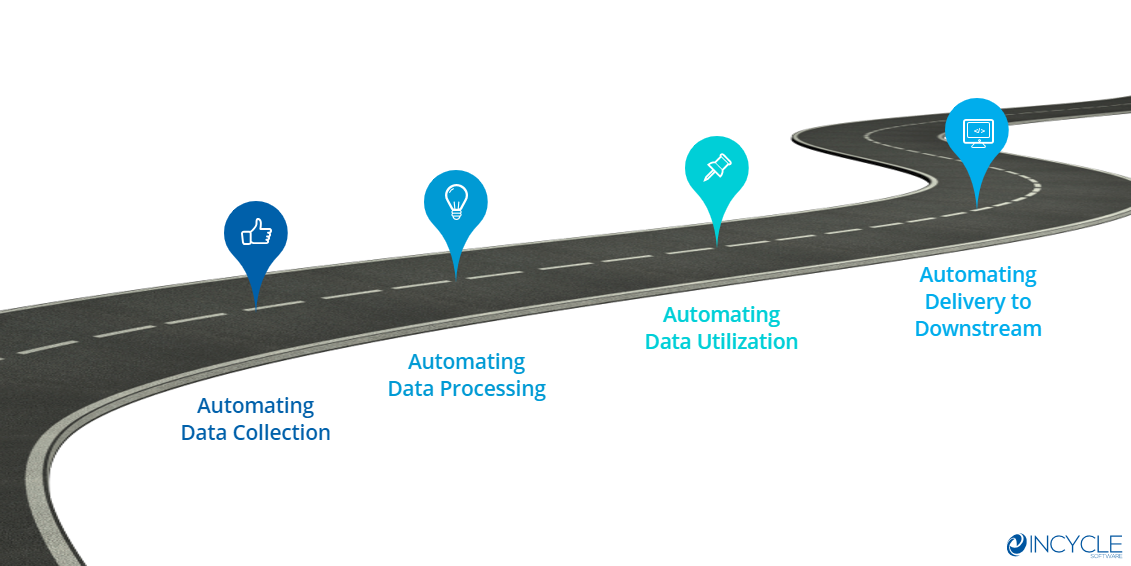

While machine learning and model creation involve some amount of programming, much of the work happens downstream of supporting data processes and tools. This brings us to today’s topic: what is a Data Pipeline?

A Data Pipeline is a scalable and reliable data processing solution. It should orchestrate data movement, transforms and, ultimately, storage in a serving layer of some sort. Ideally, its architecture is modular, allowing it to adapt to a variety of sources, languages and tools.
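To make the definition concrete, here is a minimal Python sketch of a modular pipeline: a source, a list of transforms and a sink, wired together by a small orchestration function. Every name in it (`ingest`, `to_celsius`, `run_pipeline`, `serving_layer`) and the sample records are assumptions made for illustration, not part of any particular product.

```python
from typing import Callable, Dict, Iterable, List

Record = Dict[str, float]
Transform = Callable[[Iterable[Record]], Iterable[Record]]


def ingest() -> List[Record]:
    """Stand-in for data movement; a real source might be an API, a queue or a file drop."""
    return [{"id": 1, "temp_f": 68.0}, {"id": 2, "temp_f": 212.0}]


def to_celsius(records: Iterable[Record]) -> Iterable[Record]:
    """One swappable transform step: convert Fahrenheit readings to Celsius."""
    for r in records:
        yield {"id": r["id"], "temp_c": round((r["temp_f"] - 32) * 5 / 9, 1)}


def run_pipeline(
    source: Callable[[], Iterable[Record]],
    transforms: List[Transform],
    sink: Callable[[Iterable[Record]], None],
) -> None:
    """Orchestrate the flow: pull from the source, apply each transform in order, store the result."""
    records = source()
    for transform in transforms:
        records = transform(records)
    sink(records)


# An in-memory list plays the role of the serving layer here.
serving_layer: List[Record] = []
run_pipeline(ingest, [to_celsius], serving_layer.extend)
```

Because each stage is just a callable, a source, transform or sink can be replaced without touching the others, which is the kind of modularity the definition describes.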

Learn more: Data Pipeline Enterprise Accelerator

Behind any data-forward organization stands a solid data management and engineering solution. Enterprise organizations have some of the most demanding data management requirements, in a world where every new feature release generates additional data to gather, track, report on and gain insights from.

Key aspects to a scalable data pipeline architecture are:

- modularity

- idempotency

- independent resource scaling

- broad support of integrations and languages

Those aspects have to hold up across a range of needs that differ from team to team:

- Data movement and ingestion patterns

- Data preparation and raw storage requirements

- Varying transform and standardization/normalization requirements

- Disparate primary storage and long-term retention needs

- Vastly different downstream consumption requirements
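Of the aspects listed above, idempotency is probably the least self-explanatory: running the same step twice should leave the data in the same state as running it once. The sketch below is one simple way to get that property, assuming each record carries a unique `id` and using a plain dictionary as a stand-in for the real store; `idempotent_load` is a hypothetical helper, not a library function.

```python
from typing import Dict, Iterable


def idempotent_load(store: Dict[int, dict], batch: Iterable[dict]) -> None:
    """Upsert each record under its key, so replaying a batch overwrites rather than duplicates."""
    for record in batch:
        store[record["id"]] = record


store: Dict[int, dict] = {}
batch = [{"id": 1, "value": "a"}, {"id": 2, "value": "b"}]

idempotent_load(store, batch)
snapshot = dict(store)

# Simulate a retry after a failure: the same batch is delivered again.
idempotent_load(store, batch)
```

After the replay, `store` still holds exactly two records. An append-only load would have produced four, which is why retries are only safe when the write step is keyed like this.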

Solving these data movement, preparation, transform, storage and consumption problems piecemeal as they come up, with no cross-org awareness, results in complex, difficult-to-maintain solutions that do little to ensure the longevity of the data management and engineering strategy. In fact, most data management solutions are reactive and ad hoc, even though data-driven business has proven to be a key differentiator across industries in today’s software-driven world.

InCycle believes there is a better way to design a Data Pipeline. We understand that making the right design choices early will ultimately determine the scalability and maintainability of the solution over the long term, given the need to support an ever-increasing volume of structured, unstructured, relational and time-series data. The implementation patterns in our approach treat the data engineering pipeline as another component of the DevOps culture: DataOps. This tightly integrates the ability to scale and evolve incrementally to meet growing and shifting demands with the ability to measure, monitor and scale the deployment of actionable insights to the organization in an automated manner.

During design, we recommend keeping the following areas in mind:

- Identify an orchestrator

- Identify ingestion requirements (where from, where to and when?)

- Identify a collection point/format

- Identify target transforms and normalization requirements (format, retention, compliance, etc.)

- Identify downstream consumption scenarios (reports, other apps, machine learning, analytics, trending, etc.)
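One lightweight way to keep these areas visible during design is to record the answers in a small structure and ask it what is still undecided. The following is only a sketch: `PipelineDesign`, its field names and the sample values are hypothetical and simply mirror the five areas listed above.

```python
from dataclasses import dataclass, field, fields
from typing import List


@dataclass
class PipelineDesign:
    """One field per design area; an empty value means the area is not yet identified."""
    orchestrator: str = ""
    ingestion: List[str] = field(default_factory=list)
    collection_format: str = ""
    transforms: List[str] = field(default_factory=list)
    consumers: List[str] = field(default_factory=list)

    def open_questions(self) -> List[str]:
        """Return the names of the design areas that are still empty."""
        return [f.name for f in fields(self) if not getattr(self, f.name)]


# Placeholder answers for illustration only.
design = PipelineDesign(
    orchestrator="hypothetical-scheduler",
    ingestion=["orders API, hourly"],
    consumers=["reports", "machine learning"],
)
print(design.open_questions())  # ['collection_format', 'transforms']
```

Here the collection point and the transforms have not been decided yet, so they surface as open questions before any plumbing is built.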

At each of these touch points, we recommend identifying the stakeholders of upstream and downstream processes and outcomes. We also recommend identifying governance requirements: data handling needs can differ vastly depending on the type of data, and treating compliance as an afterthought can be costly for key resources.

Ultimately, the right Data Pipeline design can be the difference between business differentiation and stagnation, as internal teams battle to get the correct plumbing in place each time a new business need arises. The same choices can also make or break downstream processes, such as a successful MLOps strategy.

So, what is MLOps?

MLOps is to machine learning what DevOps is to software development. Our next post will address MLOps and guide you through the journey of market differentiation through data management excellence.

Looking to learn more, faster? Check out our MLOps Enterprise Accelerator.